How to resolve "No space left on device" errors

Hello,

In the last few days we have been encountering the "An unexpected error has occurred during Workflow execution: 9003: An unexpected error has occurred during Workflow execution. No space left on device" error. We have gone through our installation and removed a lot of datasets that we no longer need, which should have freed up a lot of space, but the error is still occurring.

I have looked at our virtual machine (which was also upgraded to double its size last month) and have found the following directories to be the source of most of our storage usage (104G in total):

My first question is whether these index files are what you would expect or if they look inflated? For reference our repository is 183M:

Looking in the large directories above, there appears to be a large number of files (about 50 in full_sort_index, 75 in value_to_rows, and over 700 in group_index). Again, is this an expected amount, or is there a problem with files not being removed/deleted in our setup?
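For anyone wanting to gather the same figures (size and file count per index directory), here is a minimal Python sketch. It is not part of Data Studio; the function names are made up for illustration, and the directory to inspect is passed on the command line rather than assumed.

```python
import os
import sys
from pathlib import Path


def dir_stats(root):
    """Return (total_bytes, file_count) for every file under root."""
    total_bytes = 0
    file_count = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                total_bytes += os.lstat(path).st_size
            except OSError:
                continue  # file vanished or is unreadable; skip it
            file_count += 1
    return total_bytes, file_count


def summarise_children(parent):
    """Size and file count of each immediate subdirectory, largest first."""
    rows = []
    for child in Path(parent).iterdir():
        if child.is_dir() and not child.is_symlink():
            size, count = dir_stats(child)
            rows.append((child.name, size, count))
    return sorted(rows, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    # Usage: python dir_summary.py <directory to inspect>
    if len(sys.argv) == 2 and os.path.isdir(sys.argv[1]):
        for name, size, count in summarise_children(sys.argv[1]):
            print(f"{size / 1024 ** 3:8.2f} GiB  {count:6d} files  {name}")
```

Pointing it at the index folder should give one line per subdirectory (for example full_sort_index, value_to_rows, group_index), which makes it easier to see which one is growing.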

Our tech setup is a Linux VM running 2.14.1.169 (we want to upgrade soon), with just under 10m lines of data in our main dataset. We have roughly 4 copies of this stored (raw, cleansed, clustered, harmonised) plus a few additional datasets. The VM runs: Operating system Linux (ubuntu 22.04); Size Standard E16as v5 (16 vcpus, 128 GiB memory).

Many thanks,

Ben

Comments

-

So that you are warned before running into this issue, you should set up an Automation to notify you if disk space falls below a certain percentage: Data Quality user documentation | Automate actions
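If you also want a check that runs outside Data Studio (for example from cron on the VM), something like the sketch below would do. This is not the Automation feature itself, just a stand-alone stopgap using Python's standard library; the function names and the 10% default threshold are assumptions for illustration.

```python
import shutil
import sys


def free_percent(path):
    """Percentage of the filesystem containing path that is still free."""
    usage = shutil.disk_usage(path)
    return 100.0 * usage.free / usage.total


def is_low_on_space(path, threshold_percent=10.0):
    """True when free space is below threshold_percent (assumed default: 10%)."""
    return free_percent(path) < threshold_percent


if __name__ == "__main__":
    # Usage: python disk_check.py <path on the data disk>
    if len(sys.argv) == 2:
        target = sys.argv[1]
        print(f"{free_percent(target):.1f}% free on the disk holding {target}")
        # A non-zero exit code lets a scheduler or wrapper script raise the alert.
        sys.exit(1 if is_low_on_space(target) else 0)
```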

Stop the server then remove anything in the temp directory:

You can go to Settings > Storage and reduce the number of days temp data is being retained.

You might be able to delete some historic repository backups:

The above will likely resolve the issue, but you can also check that the data you are loading/storing is being compressed by default, which can reduce its size significantly. https://docs.experianaperture.io/data-quality/hosted-aperture-data-studio/data-studio-objects/datasets/#data-compression

1 -

Hi @BAmos, your repository.db is not particularly large. Because the size is shown in bytes, the number of digits can be misleading, but 183 MB is not big. That said, without knowing how you use Data Studio, I would guess that most of it is job history. You can see the number of days of retention in the Edit Environment dialog. I would recommend setting it as low as you need. The same goes for the audit retention period.

The repository.db is tidied and compressed (removing deleted records etc) at server startup so if you've not restarted the server for a while this might help.

I am happy to take a look at what might be causing the increased size, but I'd need the file. Please let me know. But I don't think it is anything to be worried about.

For the indexes - it largely depends on the data size and what you're doing with them. Josh's response contains pretty much everything you need to know on this, but happy to answer any other questions.

Best regards,

Ian

1 -

Hi @Josh Boxer and @Ian Hayden

Thank you both for your input on this, it was very helpful and I was able to use the advice to resolve the issue last week.

If anyone else gets into this situation in the future, here are the steps we took:

1) in the opt/ApertureDataStudio/resource/index folder we manually deleted the oldest indexes (we had a month's worth of very large ones, with multiple timestamps per day in some cases)

2) after enough space was cleared we were able to restart ADS properly

3) in the system settings we reset the indexes to expire after 5 days (which is fine for our use case)

5 -

Bringing together several different threads and comments into a set of steps I would suggest following as a starting point if you run out of disk space on your Aperture Data Studio DB disk:

- Stop the Aperture service if running

- D:\ApertureDataStudio\data\resource\temp -> you can remove anything in the temp directory without any side-effects.

- D:\ApertureDataStudio\resource\index folder -> manually delete the oldest indexes. These are created during workflow execution and are typically not needed again. If they are needed again but aren't present, the workflow simply recreates them on next execution.

- Check for any large mdmp files in the Data Studio installation directory and delete these

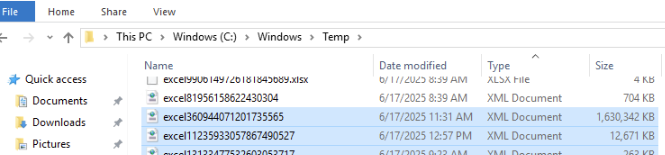

- Check for large "excel<number>.xml" files in C:\windows\temp. These may be created when data is downloaded as an Excel file (from a "View" only), and can be safely deleted. Note that this issue was resolved in v3.1.8 and the temp files are no longer retained.

- Review repository backups and backup settings. You may have old backups from previous upgrades that can be purged. Be careful not to remove recent backups.

- Start the Aperture service

- Go to Settings > Storage and review / reduce the number of days temp data, exported files and logs are retained, if appropriate.

- Go to Settings > Performance and review / reduce the index expiry time. This controls how long intermediate indexes (created during workflow execution) are retained

- Set up a low disk space alert.

- Profile > Manage Environments -> Edit. Review / reduce Job detail retention and Audit retention period (which can inflate the size of the repository.db)

- Review any large loaded data or snapshots which are no longer needed, and remove these (rather than deleting the Dataset you can simply remove the batch(es)). This would reduce the space taken up by .\ApertureDataStudio\data\resource\source\dataset, where loaded datasets (including snapshots) are stored. By deleting a Batch (values) rather than a Dataset (values + schema), you retain the dataset schema and associations, such as usages in Workflows or Views, sharing etc., and you can populate the Dataset with more data in future.

- Go to Settings > Performance and review the Compression settings used for loaded data, to achieve the necessary balance between workflow execution performance and disk space usage.
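For the "manually delete the oldest indexes" step, a small script can list what would be removed before anything is deleted. The sketch below is only an illustration: it assumes each index is a top-level file or folder inside the index directory and that its last-modified time is a fair proxy for its age, neither of which is confirmed by the product documentation. The function names are hypothetical, and it defaults to a dry run. Only run it while the Aperture service is stopped.

```python
import shutil
import sys
import time
from pathlib import Path


def stale_entries(index_dir, max_age_days, now=None):
    """Top-level entries last modified more than max_age_days ago, oldest first."""
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400
    found = []
    for entry in Path(index_dir).iterdir():
        mtime = entry.lstat().st_mtime
        if mtime < cutoff:
            found.append((mtime, entry))
    return [entry for _mtime, entry in sorted(found, key=lambda pair: pair[0])]


def remove_entries(entries, dry_run=True):
    """Delete the given entries; with dry_run=True only print what would go."""
    for entry in entries:
        if dry_run:
            print(f"would remove {entry}")
        elif entry.is_dir() and not entry.is_symlink():
            shutil.rmtree(entry)
        else:
            entry.unlink()


if __name__ == "__main__":
    # Usage: python prune_indexes.py <index directory> <max age in days> [--delete]
    if len(sys.argv) >= 3:
        candidates = stale_entries(sys.argv[1], float(sys.argv[2]))
        remove_entries(candidates, dry_run="--delete" not in sys.argv[3:])
```

Run it without `--delete` first and check the list. Once the index expiry setting is reduced, the server should keep this folder under control on its own, so this is only meant for the one-off clean-up.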

1 -

Good tips Henry, thanks

0