Duplicate triggering of a workflow with the data dropzone

Two questions, please:

When a file is dropped into the data dropzone, it is removed almost instantly; sometimes we don't even see it in the folder. Users therefore assume the file has not been processed and submit it again.

Q1. Is there a way to see whether the file has been picked up, especially for Data Consumers, who cannot see the Jobs menu? Is there a place where these files are stored after they are taken from the data dropzone?

Q2. How can we prevent the same workflow from being triggered twice? I can see both runs executing, which can produce bad results, especially when the input dataset changes in the middle of the process. Is there a way to specify, in the notification that runs the workflow, that it should run only when the workflow has NOT already started?

Thanks,

-George

Comments


For security, files disappear once they have been processed.

External system dropzones let you set the polling time, so the file stays in the folder for longer, if that approach would help avoid confusing your users: https://community.experianaperture.io/discussion/857/aperture-data-studio-2-8-1

It sounds like you are using an Automation to run multiple Workflows when a Dataset gets updated. Automations trigger Workflows in parallel. Are the Workflows related? You could instead set the Automation to run a Schedule containing multiple Workflows, which will run in sequence.

Lastly (and probably most simply), create a Scoreboard Chart as a Dashboard widget that the Consumer has access to, showing the timestamp of the latest file (note the Source metadata dropdown).


@Josh Boxer Thanks for the quick response. I will use the last method to check whether the file has been loaded.

Do you have an answer for Q2? I have a notification that triggers the workflow for the dataset when the dataset is loaded, so if a file is dropped twice, the workflow runs twice. We can advise users not to drop a file more than once, but occasionally they may still do so, and two jobs for the same workflow will then run at the same time. Is there any way to check that the workflow is not currently running before triggering it?
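As a generic illustration of the condition asked about here (not an Aperture Data Studio feature or API), a "skip if already running" guard in an external trigger script could look like the following minimal Python sketch. `WorkflowTrigger`, `try_start`, `finish` and `on_dataset_loaded` are hypothetical names.

```python
import threading


class WorkflowTrigger:
    """Hypothetical guard: allows at most one run per workflow name at a time."""

    def __init__(self):
        self._running = set()           # names of workflows currently running
        self._guard = threading.Lock()  # protects _running across threads

    def try_start(self, workflow: str) -> bool:
        with self._guard:
            if workflow in self._running:
                return False  # already running: the caller should skip this trigger
            self._running.add(workflow)
            return True

    def finish(self, workflow: str) -> None:
        with self._guard:
            self._running.discard(workflow)


def on_dataset_loaded(trigger: WorkflowTrigger, workflow: str, run) -> bool:
    """Run the workflow only if it is not already running; return True if it ran."""
    if not trigger.try_start(workflow):
        print(f"skipped: {workflow} is already running")
        return False
    try:
        run()
    finally:
        trigger.finish(workflow)  # release even if the run raises
    return True
```

Skipping is only appropriate when the second trigger is an accidental duplicate. If two regions drop different files, a skipped trigger would leave the second file unchecked, so waiting (queueing) is the safer behaviour in that case.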


What is the issue with the Workflow running twice?

I don't really have an answer, but you could try some trial and error with something like:

- Automation 1: Data loaded AND Workflow completed within N mins

- Automation 2: Automation 1 time period expired (meaning the Workflow is still running)


@Josh Boxer Here is the scenario (jobs are triggered by data dropped into the data dropzone).

I have a workflow that checks whether a load from a flat file into SAP worked correctly. It pulls in the input file, refreshes the SAP table (MARA), and joins it with the input file to check that the fields are correct. The workflow then exports a report. This works fine for any single file (Americas/EMEA/APJ); each region loads separate data into the same SAP table. But if Americas and EMEA send their files at the same time and the workflow runs twice (e.g. one run for Americas and one for EMEA), one of the jobs fails.

Here is the error message: Unexpected errors have occurred during Workflow execution: 9003: An unexpected error has occurred during Workflow executionjava.lang.IllegalArgumentException: 0: row out of range.

My guess is that this happens when one workflow attempts to join with the MARA table while the other workflow empties and refreshes it. This is just one example; I think there are many situations where we need to restrict or queue job runs to avoid such conflicts. Another example is the same SAP table being refreshed by different workflows, which also causes issues.

I have seen lock parameters used in other systems: one job holds the lock while running and releases it after execution, so the next job can then acquire it. I think something like that is needed for job execution.
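A minimal Python sketch of the lock behaviour described above, assuming jobs run as threads in one process and that a hypothetical `NamedLocks` registry hands out one lock per logical resource name such as "MARA". It illustrates the concept only; it is not an Aperture Data Studio feature.

```python
import threading
from collections import defaultdict


class NamedLocks:
    """Hypothetical registry of logical locks keyed by resource name (e.g. "MARA")."""

    def __init__(self):
        self._locks = defaultdict(threading.Lock)
        self._guard = threading.Lock()  # so two threads never create two locks for one name

    def get(self, name: str) -> threading.Lock:
        with self._guard:
            return self._locks[name]


locks = NamedLocks()
log = []


def run_job(region: str, resource: str = "MARA") -> None:
    # Blocks until whichever job currently holds the lock releases it.
    with locks.get(resource):
        log.append(f"{region}: refresh {resource}")
        log.append(f"{region}: join and export report")


threads = [threading.Thread(target=run_job, args=(r,)) for r in ("Americas", "EMEA", "APJ")]
for t in threads:
    t.start()
for t in threads:
    t.join()

print("\n".join(log))
```

Each job holds the MARA lock for its whole refresh-and-join sequence, so one region's refresh cannot empty the table while another region's join is reading it. Python's `threading.Lock` does not guarantee first-come, first-served ordering, so a strict FIFO job queue would need an explicit queue in front of the lock.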

For now, we need to monitor the jobs manually so that the two regions do not send their files at the same time. Let me know if you have a better way to manage this situation.

Thanks, George


How often are these files being dropped and how long does it take the Workflow to finish updating the SAP table?


The process is used for integrating acquired companies. While an integration is in progress, files are dropped several times a day; when no integration activity is going on, the workflow may not be used for months.

My current workflow updates the SAP table in 10 minutes, but some workflows take much longer (1 to 4 hours) to refresh big tables.


Hi George

Re-reading the thread, I'm not entirely sure I have understood the setup, but if multiple files are being dropped into the same dropzone, you probably want to prevent them from overwriting each other. One thing you could try:

Set the Dataset to Multi-batch (to prevent data from being overwritten) and enable Allow Workflows to delete batches.

Then set the Source step to take one batch and to delete that batch on completion.
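To show why Multi-batch stops one drop from overwriting another, here is a toy, single-threaded Python model of the two settings above. `MultiBatchDataset` and `run_workflow` are hypothetical names, and this is not how Aperture implements batches internally.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class MultiBatchDataset:
    """Toy model: each load appends a new batch instead of overwriting the data."""
    batches: deque = field(default_factory=deque)

    def load(self, rows) -> None:
        self.batches.append(list(rows))

    def take_one(self):
        """Return the oldest batch without removing it, or None if there is none."""
        return self.batches[0] if self.batches else None

    def delete_taken(self) -> None:
        """Remove the oldest batch (the one take_one returned)."""
        self.batches.popleft()


def run_workflow(ds: MultiBatchDataset, process):
    """Source step takes one batch; the batch is deleted only after process succeeds."""
    batch = ds.take_one()
    if batch is None:
        return None
    result = process(batch)  # if this raises, the batch stays for a later run
    ds.delete_taken()        # "delete the batch on completion"
    return result
```

Because the batch is deleted only after `process` returns, a failed run leaves its batch in place for the next run, and two drops in quick succession are processed one after the other instead of the second replacing the first.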


@Josh Boxer Yes, I think I have three problems: a user accidentally submitting the file twice, users in different regions submitting files simultaneously, and other workflows refreshing the SAP tables while this workflow is running.

Changing all datasets to Multi-batch would be a lot of work, and it sounds complicated.

Is there a way to force Aperture to take a copy of the input file and work only with that copy, so that any update to the source file is ignored? For example, if there is a Transform step at the very beginning, will it keep a copy of the source data so that, for further processing, Aperture does not go back to the source file? Is that a safe assumption?


No, that is not a safe assumption. If you look at the Job details page, you will see that workflows are optimised so that steps are not processed linearly/sequentially.

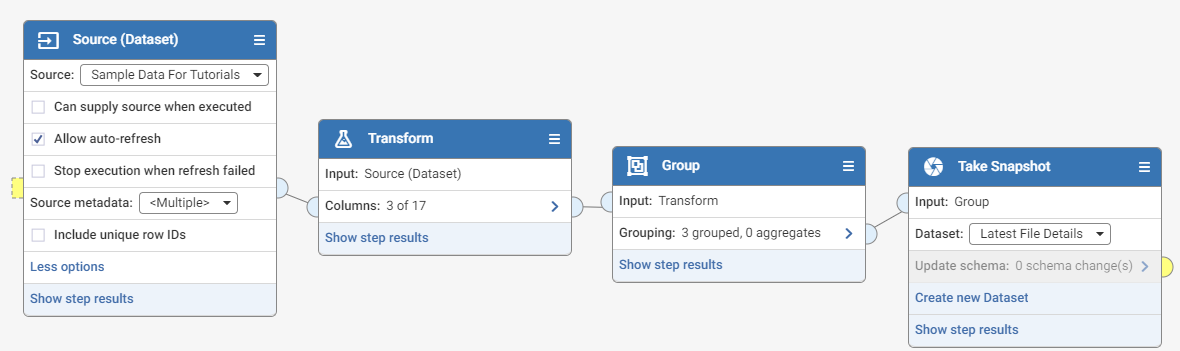

The Take snapshot step is the way to take a copy. Snapshots can also be set to multi-batch, so write the source to a multi-batch snapshot, use that snapshot as the source for the current workflow, and delete batches once the workflow completes, as described above.


Hello @Josh Boxer

Thanks for your guidance. We plan to control the jobs manually for now.

Could you please capture the following as a feature request:

a) An option to prevent duplicate jobs from running

b) An option to let jobs wait (a job queue) for resources or a logical lock. Example: SAP Material table verifications for MARA/MAKT/MARC. There are three jobs, and all three refresh the MARA table, so if they all run at the same time, the MARA table is unstable (it is occasionally empty before the refresh completes). With a logical lock named MARA, whichever job is running would hold the lock and the other jobs would wait in a queue. Once that job finishes and releases the lock, the next job can run.
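For option a), one generic way to recognise an accidental resubmission is to fingerprint the dropped file's contents. The minimal Python sketch below assumes the trigger has access to the file bytes and treats the same content for the same workflow within a time window as a duplicate. `DuplicateFilter` and the one-hour default window are hypothetical choices, not Aperture settings.

```python
import hashlib
import time


class DuplicateFilter:
    """Hypothetical filter: rejects a drop identical to one accepted recently for the same workflow."""

    def __init__(self, window_seconds: float = 3600.0):
        self.window = window_seconds  # assumed window; tune to how far apart real resubmits are
        self._last_seen = {}          # (workflow, sha256 hex digest) -> time of last accepted drop

    def accept(self, workflow: str, content: bytes, now=None) -> bool:
        now = time.monotonic() if now is None else now
        key = (workflow, hashlib.sha256(content).hexdigest())
        last = self._last_seen.get(key)
        if last is not None and now - last < self.window:
            return False  # same bytes for the same workflow inside the window: treat as duplicate
        self._last_seen[key] = now
        return True
```

The time window lets the same file be legitimately resubmitted later while still catching double drops made a few minutes apart. A file with different contents (for example, another region's file) always gets a different key and is accepted.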

Please let me know if you have any questions.


Thanks George, I will discuss this with the team.
