Ensuring you always return the same duplicate ID with Find Duplicates

Find Duplicates returns consistent Cluster ID after each run. Consistency here applies to the sequence order of the input file. So if the same file is submitted to Find Duplicates, in the same order, then the Cluster ID will be the same on each run.

In some cases you may not be able to guarantee the order of the records being received but still want the same IDs generated after each run, unless a cluster has been merged or split due to a change in a linking/bridging record.

Here we will demonstrate how to assign an identifier to each record, which will persist through reprocessing in any sequence order, or with the addition of new records. We will refer to this identifier as a persistent ID.

Note: All workflows were built with Data Studio 1.6.0, please check the release notes for backward compatibility with the version you're running.

Properties of a good identifier

1) The ability to recreate the persistent ID with no prior processing knowledge

Each record will require a unique identifier, which will be used in determining the persistent identifier for the cluster. This ensures that a property of the record itself can be used to generate the persistent ID, rather than any prior processing knowledge. Using a unique ID for the record also ensures that this does not change even if other fields within record, like name, address etc., are updated.

2) Time based and ordered

Within a cluster or records we want to ensure that a method exists to always select the same ID as the persistent ID for the cluster. The simplest method for doing this is to assign each record an ID which tracks the order in which the records were created, and orders them. This way, we can select the lowest record ID as the persistent ID for the cluster.

The simplest example, where only data from one table is being processed, would be to use an AUTO INCREMENT ID.

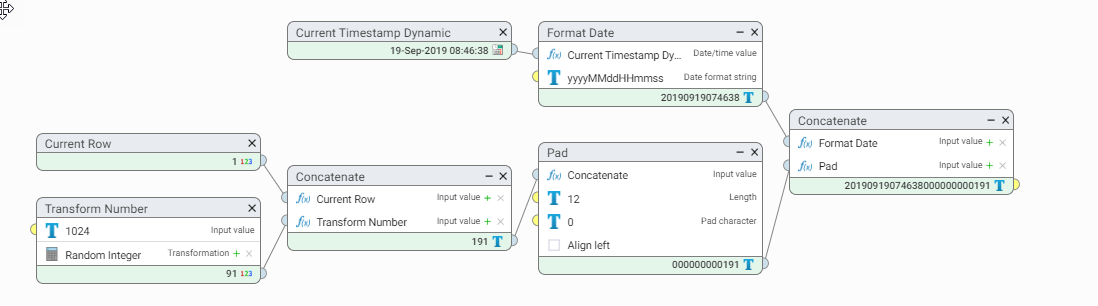

If the data is coming from multiple systems, or you are unable to assign an AUTO INCREMENT ID, then an ID can be generated within Data Studio, which will order records by the first time they were seen or processed. A transform step with a new column calculated as shown below will satisfy this requirement.

We use the date and time, but since we can process thousand of rows a second, we append the row number as well as a random integer.

3) Globally unique

The last attribute we require for an ID is one that we can guarantee to be globally unique across all the data sets we will be linking. If there will only ever be one, this is not a problem. However, if new data sources are likely to be added, the safest option is to generate a new globally unique ID within Data Studio.

The method shown above will do this if only one instance of Data Studio is being used and only one or two workflows will be ingesting data.

If multiple instances of Data Studio are being used, across multiple sites, a more robust and distributed method. A custom step is available which implements a variant of the Twitter Snowflake algorithm to solve this. Contact your account manager if you require this step.

Assigning a persistent identifier

We will assume that all records are coming from a single source system and that we have an auto increment ID assigned. To demonstrate this behavior I have used the GBR Find Duplicates Sample file and a randomly sorted version of the same file. I want the same persistent IDs for both files show below

When each of these files are run through Find Duplicates, the cluster IDs will be different for each cluster, as the sequence order has changed between them. The simple method for creating a persistent ID, is to choose minimum record ID for each cluster and then join that back to the original records.

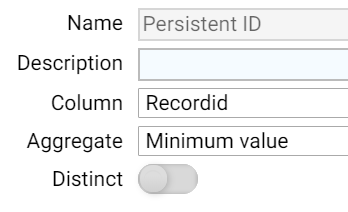

To select the minimum record ID, we simply group on the Cluster ID, and then add a new aggregate column called "Persistent ID" which is the minimum value of the "Record ID" column

We then perform a join the result back to the original input to append the Persistent ID to each record.

If we sort on the Persistent ID for both the original and randomized version, we can use a Multi-View to compare the results and see that they are both the same.

This full workflow, using the files above, is here

Determining changes to commit

When processing a source through Find Duplicates which may have had additions, updates or deletions, it is possible that existing persistent IDs will change. A new or updated record may bridge or link two existing records, merging the two clusters. Similarly, an update to or deletion of a linking/bridging record may cause two previously linked records or clusters to now split.

In each of these cases, more changes to IDs may be required than the records added/updated, but with large data sets we may not want to update all records.

The process to determine this is simply a case of comparing the Persistent ID as supplied, with the new one generated.

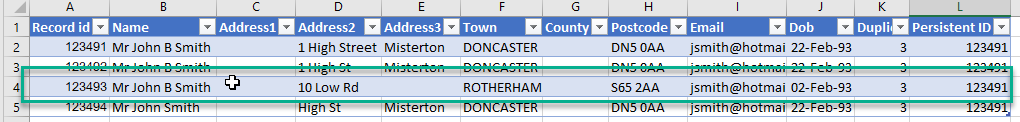

If we take the following example, which is a subset of the GBR Find Duplicates Sample file.

Using just the GBR Indvidual Default rules, which don't take into account Email or Dob, we find a loose match between these four records.

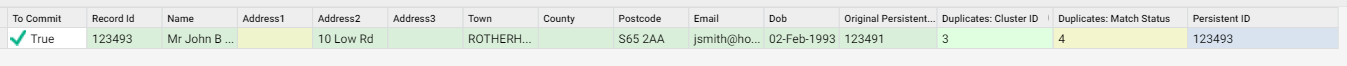

If we now receive an update for record 123493, as shown below, we would want to only update the IDs where the cluster ID has changed.

When we process this file, we rename the Persistent ID column to Original Persistent ID and process the file as previously discussed, assigning a persistent ID. We then compare the new ID to the old, and filter for records where they are not the same.

Which, in this case, gives us a single record.

Comments

-

hi @Carolyn I guess the question would be, that in merging the data from these multiple systems, would you consider the nomination of a particular or specific system as the master?

There are a couple of strategies that you could use here, depending on your answer that might guide a couple of ideas.

0 -

My view is that there are a couple of ways you could tackle this. The most commonly used one is to use the "matchstore" (derived from the Find Duplicates process) as your golden nominal store. This works, there are a number of implementations that do this. The advantage is of course, the fact that it is lightweight and well instrumented with APIs for searching and delta record processing. This is one approach. It does required the implementation of some technical bits to get it to all work.

The challenge with this approach is that large delta load processing may take some time (say you bring on a new data source); and really the clusterid becomes your persistent ID. The cluster ID is also only semi-permanent. The next time you run a full match from scratch, the existing Cluster ID that you might use, is discarded and new clusters are calculated. So while all this works, I personally don't like it.

A second approach you could consider is a new datastore away from the matchstore and away from Data Studio, say on SQLServer or in a data-lake. The contents of this is the result of the harmonize process where you effectively then calculate a secondary persistent ID. When I say calculate, I am suggesting that you use some method to calculate a globally unique identifier (GUID). In Data Studio you have the ability to convert a string value to a GUID using the MD5 hash. Your string could be layered from a number of key static attributes from the data. My preference would probably be :

- First Name

- Middle Name

- Last Name

- Date of Birth (if known)

Concatenate these and then use the hash to a new column to create the HASH-ID

While this is not super perfect, it at least gives you something unque that you can converge on. During matching, you would draw this data into the data as another data set and when you harmonize you always converge on this record as the dominant survivor, all other systems refer back to this one.

I'd be interested to hear what @Ian Buckle and @Akshay Davis think too.

0 -

@Carolyn i thought about this a little bit more and don't think there is any easier way to do this other than what has already been suggested.

I realize that the Cluster ID cannot be used as a unique id reliably since the changes from one run to another is unpredictable depending on the changes of the source files. It could be that even if the Cluster IDs are the same, it may represent a different value.

The Unique ID generator can generate a unique ID but not a persistent one. So you could use that step to generate a unique id for the particular row the first time you see it.

Alternatively, you can also also use a hash function to generate a unique ID based on a combination of unique elements like Name and DOB.

Using Transform to add a new Hash column.

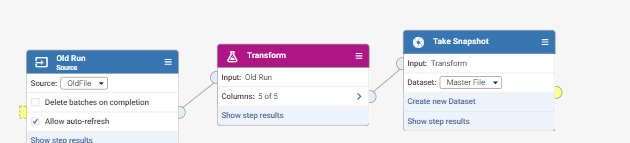

For simplicity sake, we will use the Hash code example. This is the Workflow 1 that will create your Master File as a start.

Workflow 2 will be something you need to run on an ongoing basis. When you have the second load of data, the Cluster IDs may have changed, so you need to determine how you can match the records from the second load to the Master File (first load). Note that there is actually no need to retain the Cluster ID as part of the Master File since it is not going to be used for any purposes anyway. In this example, i have retained it just to illustrate that it cannot be reliably used as a Unique Id.

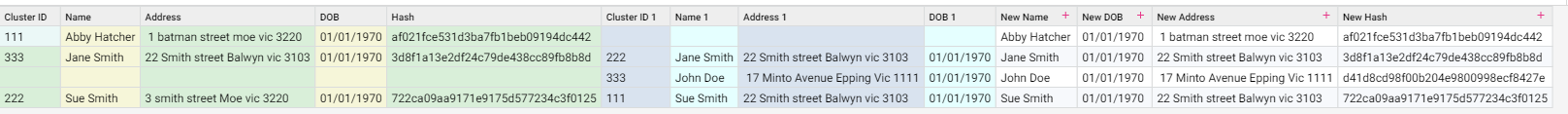

We continue to assume name and dob does not change since we have built our unique hash code based on this combination. We could do a full outer join with the Join step, joining on Name and DOB to obtain the following results:

Notice that the Cluster Id 333 appears in both loads, but represents a different value, so Cluster Id cannot be used as a Unique id.

From hereon, you can decide what needs to be updated/added/removed (if any) from the Master File.

Assuming you want to keep the old records in the Master file, add on the new records and update the existing records with the latest address, you can create the new name, dob, address, hash columns:

Example: New Address function. Only assign a new address if the new run contains an address.

Example: New Hash function. Only assign a new hash if the new run doesn't have a hash.

Once this is done, you can update map "New" columns to the Master File snapshot. So, your Master File is now updated and ready to be compared against the next (third run) using Workflow 2.

Hope this helps.

1