Schema-Flexible Configuration with JDBC (Apache Hive 6.0) External System

We've successfully configured an external system using Connection type: JDBC and DBMS: Apache Hive 6.0.

I’m looking for guidance on how to set up a Schema-Flexible configuration. Specifically:

- Is it possible to use Schema-Flexible JDBC connections to support workflows that export data to multiple schemas?

- For example, consider two databases:

- Database A with tables

T1andT2 - Database B with tables

T3andT4

My workflow needs to export data intoA.T1andB.T4.

Can this be achieved using a single Schema-Flexible JDBC external connection?

- Database A with tables

Any insights or examples would be greatly appreciated!

Comments

-

Hi Sneha

My colleague @Ian Buckle is suggesting the following:

Two separate databases:- Create two separate External System configurations for each database connection.

- Create separate dataset for each table

- Map and Export to the desired tables from one workflow

Multiple schemas in one Database:

- leave the schema field blank which will create a schema flexible configuration

- ensure the Force JDBC schema name is set to false

- ensure credentials has appropriate permissions to the Schemas in the database

- Create separate dataset for each table (Ensure to add Schema name in the dataset name to distinguish as Aperture doesn't do this natively during dataset configuration)

- Map and Export to the desired tables from one workflow

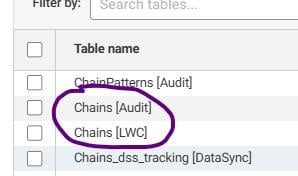

Schemas will appear like below for the same connection

Also to note, this is using the External System flexible schema functionality that introduced in 3.0.7.

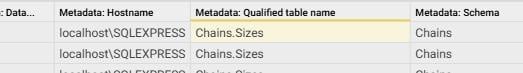

In regards to naming the default Dataset name should include both the schema name and table name, at least in the scenario where two schemas have the same table:The workaround is to refer to the Dataset metadata but that's only available in a workflow:

Hopefully this will help you.

0